AI Readiness: A Strategic Playbook for Higher Education Leaders

The New Academic Imperative

Higher education is undergoing a structural transformation shaped by many forces. Chief among them are accelerating student expectations, emerging AI tools and capabilities, and the increasing fragility of traditional support systems.

While over 72% of institutions report investing in AI, only 21% feel confident in their preparedness to use it ethically, securely, and effectively. Meanwhile, faculty workloads are rising, student support needs are more complex and urgent, and the Fall term, particularly its first six weeks, has become the most decisive period for student engagement and retention.

“Fall readiness” no longer means onboarding students or polishing LMS shells.

It means being institutionally ready to support, engage, and intervene at scale, before learners slip away.

AI solutions promise to help, but only if deployed responsibly. The stakes are high:

- A poorly configured chatbot can erode trust.

- An unreviewed AI grader can damage academic integrity.

- A fragmented governance process can halt innovation before it starts.

At the same time, institutions that have deployed ethical, scalable AI solutions across their tech stack are showing what’s possible for students and faculty:

- AI Support Assistants have cut Tier-1 support tickets by 30–50%.

- International students get the help they need, with AI Support tools providing guidance in 107 languages.

- Faculty reclaim time for teaching instead of spending overtime on grading: AI Feedback & Grading tools have helped them cut administrative work, and students preferred AI-enhanced feedback 84% of the time.

Most importantly, these results aren’t theoretical: they are happening in real time, within four to six weeks of implementation.

What This Guide Delivers

This guide is a practical playbook for institutional leaders ready to make Fall 2025 their most AI-ready term yet.

You’ll find:

- A strategic roadmap grounded in governance, equity, and outcomes

- A tour of the competitive landscape with clear selection criteria

- Pre-built checklists, dashboards, policies, and messaging templates

- Real-world examples from institutions using LearnWise to scale support with integrity, safety and security in mind

Whether you’re a Vice President of Student Success, a Provost, a CIO, or a Director of Teaching and Learning, this document will help you lead your institutional AI readiness roadmap with clarity and confidence.

Chapter 1: A New Era of AI in Education

Reading the Room: Institutional Sentiment & Strategy

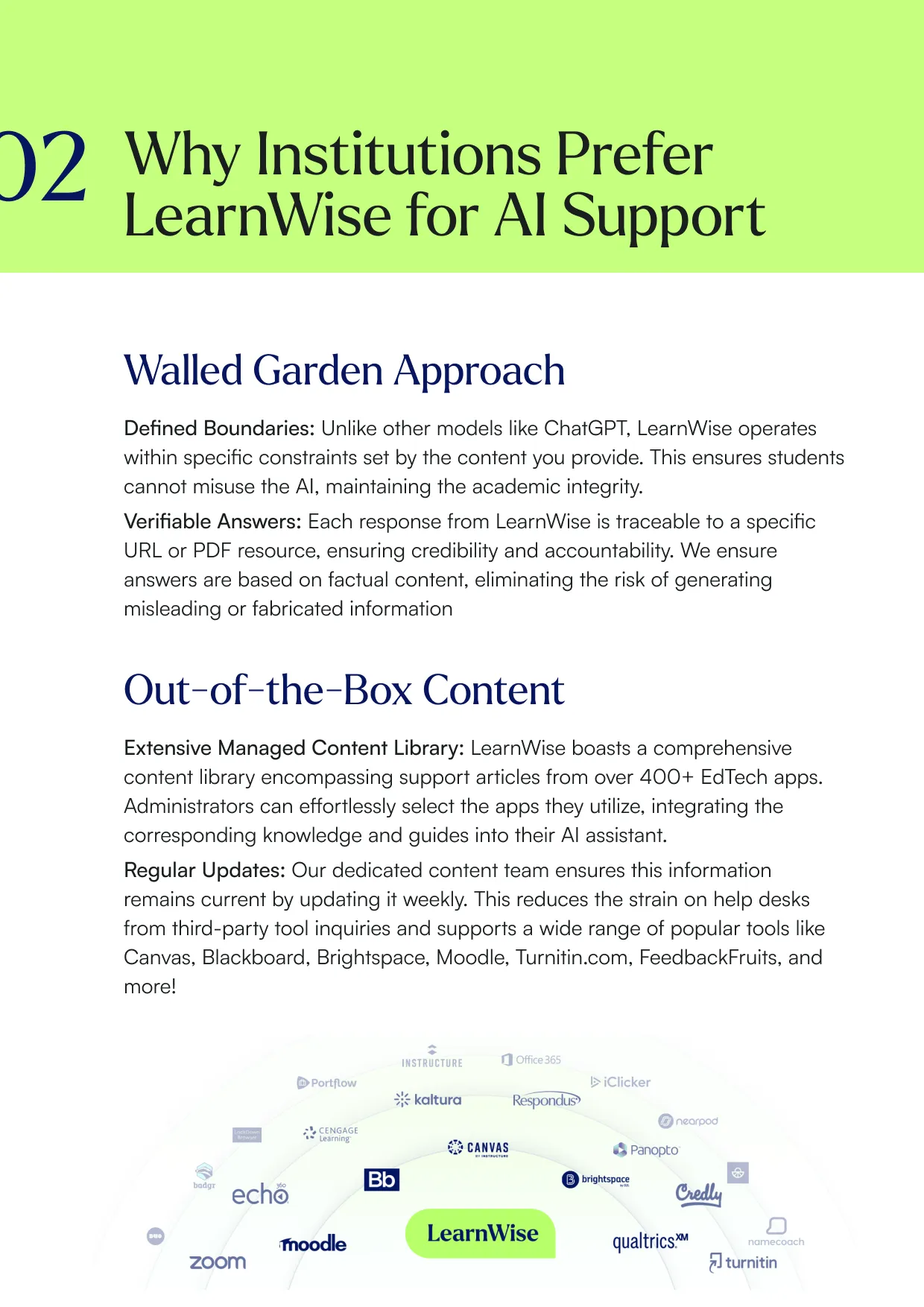

In 2025, AI adoption in higher education is no longer a fringe activity; it’s an imperative. According to the EDUCAUSE 2025 AI Landscape Study, 80% of institutions are now actively experimenting with AI, but fewer than half report having formal governance frameworks in place. This tells us an ecosystem is in motion, but policy and action are not yet aligned.

The AAC&U and Elon University “Leading Through Disruption” report echoes a similar tension: top-down enthusiasm is outpacing institutional readiness. Senior leaders see the promise of AI, but decentralized implementations and uncoordinated pilots can stall momentum, lead to faculty skepticism, and create uneven student experiences.

The 2025 UPCEA/EDDY AI Readiness Report surfaces the three biggest challenges to scaling AI across institutions:

- Lack of alignment between leadership vision and operational execution

- Inconsistent procurement and implementation strategies

- Insufficient infrastructure to support institution-wide deployment

To address these challenges, institutions must move from AI experimentation to orchestration. Successful implementations start with a shared strategy: a vision co-owned by academic affairs, IT leadership, teaching and learning centers, and student support teams. The goal? Focus AI investments on high-impact use cases, such as academic support, administrative help, and 24/7 accessibility, especially in the first six weeks of the semester, when student engagement and retention outcomes are most malleable.

Choosing Ethical AI: Guiding Frameworks for Institutions in Education

AI governance in higher education cannot be an afterthought. As the EDUCAUSE “Ethics Is the Edge” framework asserts, ethical alignment is what differentiates responsible adoption from reactive deployment. That means moving beyond tool approval lists toward a living governance model.

Key principles include:

- Faculty Autonomy: Allow instructors to choose how AI appears in their teaching workflows.

- Student Voice: Include learners in governance structures. Co-create policies that reflect their needs and expectations.

- Transparency: Maintain public AI policy hubs where students and staff can see what’s approved, how it’s being used, and how to raise concerns.

- Flexibility: Recognize that tools, and the risks they pose, will evolve. So too must your institutional approach.

Operationalizing AI Readiness for Educational Institutions

People, process, and platform: these are the levers of institutional readiness.

The UPCEA/EDDY Readiness Report reveals that while AI excitement is high, 44% of institutions lack any formal AI training plan for staff. Readiness is not just about choosing tools, but about enabling people to use them responsibly and effectively.

Your operational readiness strategy should include:

- AI Progress Mapping: Where is AI currently being used (formally or informally)? What’s working?

- Cross-Functional Squads: Form teams that include faculty, IT, student affairs, and accessibility leaders.

- Use Case Triage: Use an impact vs. feasibility matrix to prioritize AI initiatives (start with high-impact, low-complexity areas).

- Training & PD: Launch professional development focused on prompt literacy, bias mitigation, and integration best practices.

Institutional Tech Jumps: AI Infrastructure & Investment in Education

According to the EDUCAUSE Horizon Report 2025, institutions that thrive with AI have a shared characteristic: they’ve modernized their infrastructure. From LMS connectivity to knowledge base control to user permissions, every technical choice becomes a strategic one.

Your readiness plan must include:

- Audit of Technical Debt: What legacy systems are blocking integration or slowing down data access?

- Integration-First Strategy: Platforms should support LTI 1.3, SSO, and RBAC, and their APIs should be accessible and documented.

- Role-Aware AI Tools: AI that knows the difference between a student and an instructor, and personalizes support accordingly.

- Analytics Pipelines: Without good data and clear baselines, there is no way to measure impact.

Fall readiness is as much about digital foundations as it is about strategic priorities.

Chapter 2: Ethical Guardrails in AI for Higher Education

Selecting & Vetting AI Solutions

Not all AI platforms are created equal. While generative models like ChatGPT power many capabilities, institutional readiness depends less on the model and more on what wraps around it. In higher education, meeting high security standards, ensuring data privacy, and safeguarding academic integrity are all paramount. Choosing ethical, responsible AI solutions is essential.

When choosing your AI solution, key selection questions to ask vendors include:

- Does it integrate deeply with your LMS, not just sit on top of it?

- Can it ingest your institutional knowledge and return context-aware answers?

- Can your IT, T&L, and Accessibility teams control how it behaves?

- Does it support human-in-the-loop workflows for grading, tutoring, or service interactions?

- Are usage costs predictable and transparent, avoiding token-based volatility?

Look beyond “ChatGPT-like” experiences. Prioritize platforms that are:

- LMS-native

- Knowledge-governed

- Role-aware

- Secure and exportable

- Transparent in performance and updates

AI Adoption Timelines in Higher Education

Over time, we have found that institutions are most successful in their AI adoption journey when they implement flexible, secure AI policies that allow for innovation while keeping institutional guardrails on student and faculty data a top priority. Change management is equally important: every stakeholder, especially faculty, needs time, trust, and training to embrace AI as a partner in their day-to-day work, a helper and assistant rather than a threat.

We already know that GenAI tools are used by up to 92% of UK university students, while up to 61% of faculty report having used AI tools in their teaching. Add the fact that 93% of higher-ed professionals plan to expand their use of AI in the next two years, and the trend becomes evident: the question is not whether AI will become an institutional policy focus, but when.

The advent of AI in higher education does not come without its (fair) concerns. Staff are worried about bias and data privacy: 49% cite potential bias in AI-driven decisions as a barrier to adoption, and 59% worry that data privacy and compliance will be a challenge.

Enter change management: a key piece of the institutional AI adoption puzzle. Two priorities stand out: involving students and faculty directly, and involving them early. Whether through intensive formats such as needs assessments, focus groups, hackathons, or test labs, or lighter-touch ones such as polls and naming competitions for your AI tools, these approaches ensure a soft landing when the technology is rolled out.

Institutions are currently most successful when they frame AI adoption in three overlapping arcs:

- Quick Wins (0–6 weeks): Launch AI for administrative FAQs, course support, and student onboarding, all high-volume, high-impact areas with clear ROI.

- Scalable Workflows (1–2 terms): Expand AI into grading support, student interventions, and campus knowledge automation.

- Continuous Optimization (1 year+): Use analytics to refine prompts, detect knowledge gaps, and enhance student journeys.

Where Can Educational Institutions Deploy AI First?

Institutions looking to deploy AI solutions can use an impact vs. feasibility matrix to triage their launch sequence.

Start where:

- Volume of inquiries is high

- The nature of information is repetitive

- Risk of error is low (e.g., administrative Q&A)

- Students already expect 24/7 access (e.g., orientation, advising, financial aid)
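To make the triage concrete, here is a minimal Python sketch of an impact vs. feasibility matrix. The 1–5 scoring scale, the quadrant threshold, and the candidate use cases and their scores are hypothetical placeholders. They are not a published rubric or a LearnWise feature; substitute scores from your own AI progress mapping.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    impact: int       # hypothetical 1-5 scale: inquiry volume, reach, retention relevance
    feasibility: int  # hypothetical 1-5 scale: content readiness, low error risk, integration effort


def quadrant(uc: UseCase, threshold: int = 3) -> str:
    """Place a use case in one of the four impact/feasibility quadrants."""
    high_impact = uc.impact >= threshold
    high_feasibility = uc.feasibility >= threshold
    if high_impact and high_feasibility:
        return "quick win"
    if high_impact:
        return "strategic project"
    if high_feasibility:
        return "nice to have"
    return "deprioritize"


def triage(cases: list[UseCase]) -> list[tuple[str, str]]:
    """Rank by impact x feasibility (highest first), breaking ties on impact."""
    ranked = sorted(cases, key=lambda c: (c.impact * c.feasibility, c.impact), reverse=True)
    return [(c.name, quadrant(c)) for c in ranked]


if __name__ == "__main__":
    # Hypothetical scores for illustration only.
    candidates = [
        UseCase("FAQ routing for financial aid", impact=5, feasibility=5),
        UseCase("Enrollment and onboarding support", impact=5, feasibility=4),
        UseCase("Rubric-aligned feedback generation", impact=4, feasibility=3),
        UseCase("Automated degree audits", impact=4, feasibility=1),
    ]
    for name, label in triage(candidates):
        print(f"{name}: {label}")
```

Multiplying the two scores rewards use cases that are strong on both axes, which matches the "high-impact, low-complexity first" guidance. A weighted sum is an equally reasonable choice if your committee values one axis more heavily.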

Top fall deployment zones:

- Enrollment and onboarding support

- Course-specific tutoring inside the LMS

- FAQ routing for financial aid and campus services

- Rubric-aligned feedback generation

Tools like LearnWise help prioritize these zones by providing pre-built integrations and multilingual agents trained on institutional content.

Going Beyond ChatGPT: Vendor Value Checklist for AI solutions in Education

Considering institutional guardrails, privacy concerns and data protection standards, we’ve compiled what should be on your must-have list when considering vendors in the space:

- Deep integrations to remove context-switching

- Clear visibility into data sources, with answers grounded in institutional data to reduce hallucinations

- Predictable pricing model (no usage-based spikes)

- Data exportability for analytics and audit trails

- Clear permissions and administrative control

- Real-world references from campuses like yours, not just prototypes

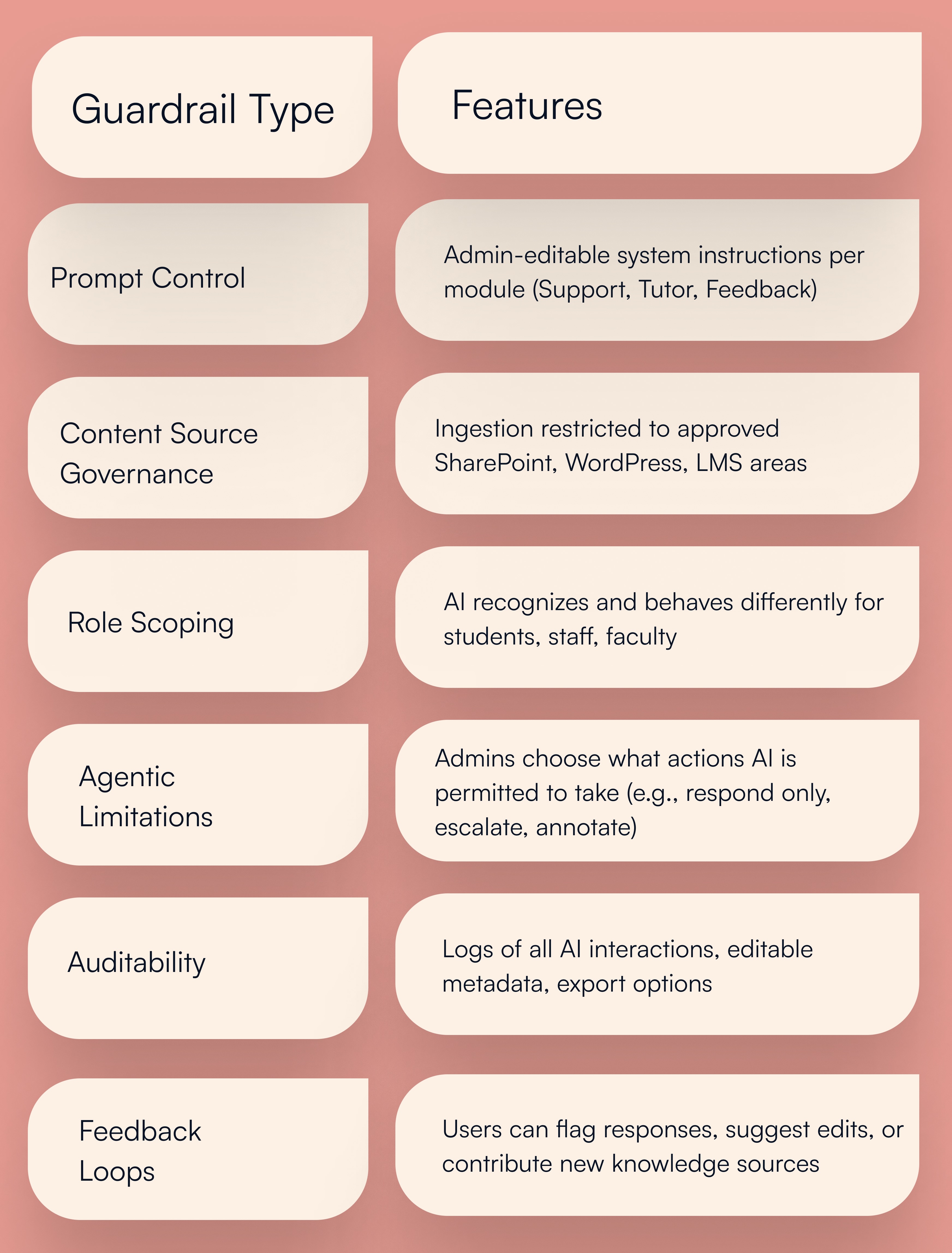

Institutional Guardrails & Control Points

Guardrails are what ensure that AI tools operate the way you intend, not just the way they’re built.

When building your AI governance framework, consider the following:

- System prompt control: What rules guide the AI’s tone, personality, and scope?

- Data usage visibility: What sources are pulled? What’s excluded? What’s private?

- Role-based behavior: Can the AI distinguish staff from student requests?

- Agentic permissions: Can you define what actions (e.g., answering vs. initiating tasks) the AI is allowed to take?
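Role-based behavior and agentic permissions can be expressed as an explicit, deny-by-default policy table. The following Python sketch illustrates the idea. The roles, action names, and grants are hypothetical examples, not any vendor's configuration schema. It separates answering (available to everyone) from initiating tasks such as opening a ticket (granted only where the policy allows).

```python
from enum import Enum


class Role(Enum):
    STUDENT = "student"
    INSTRUCTOR = "instructor"
    STAFF = "staff"


class Action(Enum):
    ANSWER_QUESTION = "answer_question"
    GENERATE_PRACTICE_QUIZ = "generate_practice_quiz"
    DRAFT_FEEDBACK = "draft_feedback"
    OPEN_SUPPORT_TICKET = "open_support_ticket"  # an action that initiates a task


# Hypothetical guardrail policy: the actions the assistant may take per role.
# Anything not listed is denied by default.
POLICY: dict[Role, frozenset[Action]] = {
    Role.STUDENT: frozenset({Action.ANSWER_QUESTION, Action.GENERATE_PRACTICE_QUIZ}),
    Role.INSTRUCTOR: frozenset({Action.ANSWER_QUESTION, Action.DRAFT_FEEDBACK}),
    Role.STAFF: frozenset({Action.ANSWER_QUESTION, Action.OPEN_SUPPORT_TICKET}),
}


def is_allowed(role: Role, action: Action) -> bool:
    """Deny by default: permit only actions explicitly granted to the role."""
    return action in POLICY.get(role, frozenset())


def authorize(role: Role, action: Action) -> str:
    """Return a human-readable decision, suitable for a behavior log."""
    if is_allowed(role, action):
        return f"{role.value} may {action.value}"
    return f"blocked: {role.value} may not {action.value}"


if __name__ == "__main__":
    print(authorize(Role.INSTRUCTOR, Action.DRAFT_FEEDBACK))
    print(authorize(Role.STUDENT, Action.OPEN_SUPPORT_TICKET))
```

Keeping the policy as data rather than scattered `if` statements means a governance council can review and version it like any other configuration.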

Operationalizing these guardrails is possible in a few ways:

- Creating syllabus and onboarding templates for faculty

- Developing AI behavior logs accessible to administrators

- Enabling version control for prompts and configurations
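Prompt version control and administrator-facing behavior logs reinforce each other: if every logged answer records the prompt version in force, administrators can audit exactly what the AI said under which rules. The following Python sketch shows that pairing under stated assumptions. `PromptRegistry` and `BehaviorLog` are hypothetical in-memory stand-ins for whatever storage your platform actually provides.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class PromptRegistry:
    """Hypothetical append-only store of system-prompt versions."""
    versions: list[dict] = field(default_factory=list)

    def publish(self, text: str, author: str) -> str:
        """Record a new prompt version, identified by a short content hash."""
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]
        if self.versions and self.versions[-1]["id"] == digest:
            return digest  # identical to the current prompt: no new version
        self.versions.append({
            "id": digest,
            "text": text,
            "author": author,
            "published_at": datetime.now(timezone.utc).isoformat(),
        })
        return digest

    def current(self) -> dict:
        return self.versions[-1]


@dataclass
class BehaviorLog:
    """Hypothetical interaction log linking each answer to its prompt version."""
    entries: list[str] = field(default_factory=list)

    def record(self, role: str, question: str, answer: str, prompt_id: str) -> None:
        self.entries.append(json.dumps({
            "ts": datetime.now(timezone.utc).isoformat(),
            "role": role,
            "question": question,
            "answer": answer,
            "prompt_id": prompt_id,
        }))

    def by_prompt(self, prompt_id: str) -> list[dict]:
        """All logged interactions produced under a given prompt version."""
        return [e for e in map(json.loads, self.entries) if e["prompt_id"] == prompt_id]
```

Hashing the prompt text makes versions tamper-evident and deduplicates accidental re-publishes. Rolling back to an earlier prompt simply appends it again, so the history stays complete.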

Deploying AI in Education: Evaluating Trust, Risk & Data Stewardship

For institutions adopting AI, trust is non-negotiable. While speed and functionality often dominate early conversations, long-term success depends on data integrity, control, and transparency. Leaders must ensure that AI systems, particularly those interacting with students or operating within academic workflows, adhere to the same rigorous standards expected of any enterprise system in higher education.

Governance isn’t only about policy documents; it’s about how technology behaves under real-world pressure. What happens when an AI tool ingests outdated content? Who controls the system prompt that shapes its responses? Can faculty or administrators audit what the AI said to a student last week?

To answer these questions with confidence, institutions should embed trust and data stewardship reviews into every phase of AI procurement and deployment. Due diligence should cover:

- Sub-processors and data routing

- GDPR/FERPA alignment

- SSO and RBAC configuration

- Prompt version history

- Audit logs and usage analytics

- Export and integration with BI dashboards

Look for vendors with transparent compliance documentation (e.g., ISO 27001, SOC 2), a published list of sub-processors, and modular control over each feature. If a platform can’t provide this, it’s not enterprise-ready.

Chapter 3: Roadmap for Education Leaders: The AI Readiness Checklist

The AI Readiness Framework: From Strategy to Systems (Macro → Meso → Micro)

AI adoption in higher education is no longer an experimental edge case; it’s a cross-campus evolution. But many institutions still treat AI as either a technical procurement or an isolated pilot. That’s why so many efforts stall after a single chatbot rollout or a one-off grading experiment.

The reality is, AI readiness is a strategic, operational, and pedagogical shift, not just a toolset. Institutions that successfully scale AI adoption understand that readiness must be coordinated across three levels:

- Macro: Strategic vision, visible governance, and leadership alignment

- Meso: Cross-departmental enablement and operational planning

- Micro: Direct interactions with learners, faculty, and staff through course-aware, role-aware tools

This tiered framework, drawn from the Quo Vadis Study and the EDUCAUSE Horizon 2025 framework, helps institutional leaders sequence their AI strategy in manageable, mission-aligned steps.

MACRO: Strategic Alignment & Visible Governance

At the macro level, AI strategy must align with the institution’s mission, values, and regulatory obligations. Governance isn’t just a checklist: it’s your trust infrastructure. Stakeholders should understand not only what tools are approved, but why, how, and by whom they are monitored.

Key actions:

- Establish an AI governance council with cross-functional membership (Academic Affairs, Student Success, IT, Legal, Accessibility)

- Publish institutional AI principles linked to your mission (equity, access, integrity)

- Launch a public AI resource hub with approved tools, policies, use cases, and training

- Conduct annual risk and opportunity assessments as part of your digital roadmap

Pro tip: AI policies shouldn’t live in a PDF buried on your intranet. Make them visible, revisable, and co-owned by students, faculty, and staff. Review every 6–12 months.

MESO: Operational Integration & Staff Enablement

At the meso level, strategy becomes implementation. This is where institutional ambition often meets friction, especially if departments lack clarity on roles, support, or training.

Key actions:

- Assign AI adoption leads within each unit (T&L, Libraries, Student Services, IT, etc.)

- Create shared implementation roadmaps that span departments and track deliverables

- Align deployments to term-based academic cycles (e.g., PD in summer, rollout in Fall)

- Provide professional development pathways so staff and faculty can use AI safely and creatively

Did you know? Only 42% of institutions surveyed by UPCEA in 2025 have AI-specific faculty PD programs. Those that do report 3x higher faculty confidence in AI usage.

MICRO: Role-Aware, Course-Aware Tools

At the micro level, AI interacts with people: students asking support questions, instructors reviewing assignments, advisors recommending interventions. At this layer, contextual intelligence becomes critical. Generic tools won’t cut it.

Key actions:

- Prioritize LMS-native tools that ingest course content and policy documents

- Ensure role differentiation (e.g., student vs. faculty vs. admin) is built into the platform

- Enable prompt customization and control by faculty, departments, or governance bodies

- Support human-in-the-loop workflows: AI suggests, humans decide

- Establish feedback loops so inaccurate or unhelpful responses improve system-wide

LearnWise delivers on all of the above with:

- Seamless LMS, SIS, SharePoint, helpdesk, and 400+ other edtech integrations

- Multilingual AI support agents tied to institutional knowledge

- Role-scoped query handling that respects FERPA and user context

- Feedback generation tools aligned to rubrics, tone preferences, and course outcomes

Ethical, Scalable Implementation: The AI Implementation Checklist

Implementing AI successfully in higher education isn’t just about selecting the right tools. It’s about orchestrating governance, compliance, stakeholder alignment, and measurable outcomes in a coordinated and time-sensitive way. As institutions prepare for 2026, this checklist distills the core readiness criteria that enable fast, responsible AI deployment across academic and administrative contexts.

Each section of this checklist maps directly to the AI Readiness Framework (Macro → Meso → Micro) and ensures your rollout is not only technically sound, but pedagogically aligned, ethically governed, and operationally measurable.

Use this as both a launch playbook and a shared tracking tool across departments.

Governance & Ownership

AI tools are only as trustworthy as the governance surrounding them. By establishing cross-functional ownership, via an AI council or steering group, you create institutional alignment and accountability. Publishing your policy stance and rollout plan not only builds internal trust, but also ensures transparency for students, faculty, and external stakeholders.

- Cross-functional AI council established

- Public AI policy/resource site launched

- Communication plan for stakeholders (students, faculty, staff)

Why it matters: Without a clear owner and public-facing strategy, AI adoption remains fragmented and risks eroding trust.

Data & Compliance

Data security and user privacy are foundational to any AI initiative, especially those that touch student records or faculty workflows. Ensuring your vendors meet ISO, GDPR, and FERPA standards is non-negotiable. Activating SSO and RBAC ensures controlled, auditable access that respects role boundaries and institutional policies.

- Sub-processors and data flows reviewed

- GDPR, FERPA, ISO 27001 compliance validated

- SSO, RBAC, and audit logging activated

Why it matters: Compliance isn’t a box to check. It’s a daily operational safeguard against misuse, misconfiguration, and reputational risk.

Tool Vetting

Choosing an AI partner isn’t just about features; it’s about fit. Tools must integrate with your LMS, respect academic integrity policies, and support accessibility and inclusion standards. Sandbox testing and reference validation are critical for de-risking deployment and setting realistic expectations with stakeholders.

- RFP/RFI includes LMS data ingestion, prompt control, accessibility alignment

- Sandbox access and references required from all vendors

- Knowledge gap detection and analytics criteria evaluated

Why it matters: Platforms that can’t be tested, traced, or tuned aren’t viable at scale. Choose tools that work inside your systems, not around them.

Faculty & Staff Preparation

No AI rollout succeeds without buy-in from educators and front-line staff. Institutions must equip faculty with clear syllabus language, guidance on appropriate use, and opt-in training. Providing reporting pathways ensures that faculty can raise concerns and participate in governance, not feel bypassed by it.

- AI syllabus language and guidelines shared

- Onboarding sessions and opt-in workshops hosted

- Feedback/reporting mechanisms available to all users

Why it matters: Faculty are the bridge between institutional goals and student experience. Empowering them with clarity and control is essential.

KPI & Analytics Readiness

Measuring success is what separates pilots from scalable programs. Institutions should baseline critical metrics before launch, including time-to-resolution, deflection rates, and knowledge freshness, and share these KPIs with executive sponsors and academic leadership. Ongoing reporting ensures accountability and continuous improvement.

- Time-to-resolution for Tier‑1 inquiries baselined

- Role-based AI usage tracked

- Knowledge freshness and self-service metrics configured

- Deflection and escalation rates reviewed weekly

Why it matters: If you can’t measure it, you can’t manage it, or fund it next year. Strong metrics help prove value and improve strategy term over term.
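The KPIs above are simple to compute once inquiries are logged consistently. The following Python sketch assumes a hypothetical `Inquiry` record with a role, an escalation flag, and minutes to resolution. It defines deflection as the share of inquiries resolved without human handoff, which is one common definition, not a standard. Run it once before launch to set the baseline, then weekly.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import median


@dataclass
class Inquiry:
    role: str                     # e.g. "student", "faculty", "staff"
    escalated: bool               # True if handed off to a human agent
    minutes_to_resolution: float  # time from first message to resolution


def kpi_snapshot(inquiries: list[Inquiry]) -> dict[str, float]:
    """Tier-1 KPIs for one reporting period (e.g. one week)."""
    if not inquiries:
        raise ValueError("need at least one inquiry to compute KPIs")
    total = len(inquiries)
    escalated = sum(i.escalated for i in inquiries)
    return {
        "deflection_rate": (total - escalated) / total,
        "escalation_rate": escalated / total,
        "median_minutes_to_resolution": median(i.minutes_to_resolution for i in inquiries),
    }


def usage_by_role(inquiries: list[Inquiry]) -> Counter:
    """Role-based usage counts for the period."""
    return Counter(i.role for i in inquiries)


def relative_change(baseline: float, current: float) -> float:
    """Fractional change vs. baseline; -0.5 means a 50% reduction."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline
```

The sketch reports the median because a handful of long escalations can distort an average. If you report mean time to resolution (MTTR), swap in `statistics.mean`.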

LearnWise as a Reference Model

Institutions using LearnWise are already applying this framework. Here’s how:

AI Campus Support: Multilingual chatbots embedded in LMS (Canvas, Moodle, Brightspace) and institutional portals (SharePoint, WordPress), answering questions about enrollment, orientation, financial aid, and academic life, all powered by institution-approved content and knowledge governance.

AI Student Tutor: In-LMS study assistant that generates practice quizzes, guides students through difficult content, and explains concepts based on real course material, not generic web results. AI is role-aware and supports FERPA boundaries.

AI Feedback & Grader: LMS-native grading assistant that helps instructors generate feedback aligned to rubrics, institutional tone, and course-level learning outcomes. Allows human review and annotations, with embedded transparency.

Analytics: Dashboards reveal knowledge gaps, top queries, AI engagement by user type, and changes in time-to-resolution. This supports continuous improvement of both the tool and institutional processes.

LearnWise supports phased deployment, so institutions can start with one module (e.g., Support) and expand over time without redoing contracts, integrations, or training.

Chapter 4: AI Implementation in Weeks (Not Months)

AI deployment in higher education doesn’t have to follow the enterprise model of “9–12 months until value.” Institutions that implement successfully have a few things in common: pre-vetted tooling, clear guardrails, cross-functional teams, and above all, speed. Time-to-value is a competitive differentiator.

Why Speed Matters

According to EDUCAUSE QuickPolls, institutions are prioritizing internal resilience, especially in areas like strategic planning, student support services, and IT operations. With limited budgets, higher expectations, and rising student support complexity, every delay costs impact.

In fact, EDUCAUSE Horizon 2025 highlights “early Fall interventions” as a top area where AI can drive measurable gains in student engagement, retention, and support equity.

Launching before the start of term, and improving throughout the first six weeks, is now the baseline for readiness, not a stretch goal.

The LearnWise Deployment Advantage

LearnWise is built for institutions that don’t have the luxury of long onboarding cycles or 10-person AI enablement teams. Here’s how it enables fast, secure, scalable implementation:

- LTI 1.3, SSO, and RBAC out of the box

- Pre-built connectors for LMS (Canvas, Brightspace, Moodle), SharePoint, WordPress, and ticketing systems (Zendesk, Freshdesk, Salesforce, etc.)

- Accelerated onboarding model: Technical setup takes 3–7 business days; institutional onboarding (training, content upload, guardrail configuration) takes 3–5 weeks.

- Dedicated academic solutions engineers with LMS, pedagogy, and IT backgrounds

- Staged rollout playbooks for pilot, phased expansion, or full institutional launch

Many LearnWise clients launch with small teams on the partner side, scaling across academic and administrative functions within the same semester.

Time-to-Value Timeline (6 Weeks)

People–Process–Platform: The Operational Readiness Checklist

Fast, scalable AI implementation isn’t just about vendor selection; it’s about aligning the right people, refining your internal processes, and ensuring that your technology foundation can support intelligent, integrated systems. Successful LearnWise partners consistently highlight one lesson: operational readiness is what turns vision into velocity.

This checklist is designed to help implementation leaders (e.g., IT directors, T&L coordinators, project managers) prepare for a smooth, institution-wide launch that minimizes disruption while maximizing early impact.

People

The most well-designed AI tools will still fail without institutional ownership. That’s why AI readiness must start with cross-functional leadership and internal advocacy. From governance to early adopters, people make the rollout real.

- AI governance team formed and resourced: A cross-campus team with representation from Academic Affairs, IT, Student Success, Libraries, Accessibility, and Student Voice.

- Functional leads named: Department-level owners who coordinate communication, training, and configuration in their respective areas.

- Faculty champions and early adopters briefed: Identify instructors willing to pilot feedback tools or tutoring support, and loop them into product design conversations.

- Communications plan approved: Define internal messaging timelines for faculty and staff, as well as external messages for students.

Why it matters: Institutional AI launches are change management projects, not just tech rollouts. Clarity of ownership, communication, and peer advocacy ensures alignment and accelerates adoption.

Process

Even the most powerful platform will fall flat without the right workflows. Your support, academic, and policy processes must be mapped, tested, and reinforced to ensure that AI tools operate in harmony with human systems.

- Policies reviewed (privacy, syllabus, escalation, tone): Confirm that your policies cover use of AI in instruction, support, and student-facing interactions.

- Support workflows mapped for handoff/escalation: Define what happens when the AI can’t answer a question, including who receives it, how it’s escalated, and how it’s tracked.

- Chatbot and tutor tested with real content: Use real student queries and institutional documents to test how well the AI performs before students touch it.

- Live chat agents and helpdesk teams looped in: Ensure that Tier-1 and Tier-2 support staff know when they’ll be looped in, and how.

Why it matters: AI tools that operate outside of your institutional processes will frustrate users and fragment the student experience. Workflow alignment is what transforms novelty into real value.
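Handoff rules become testable when they are written as an explicit function. The following Python sketch is one way to express "what happens when the AI can’t answer". Several parts are hypothetical: the sensitive-topic list, the 0.7 confidence threshold, the assumption that each draft answer carries a confidence score and cited sources, and the queue names. None of them describes a specific product.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical topics that always go to a person, regardless of AI confidence.
ALWAYS_ESCALATE = {"grade appeal", "wellbeing", "financial hardship"}


@dataclass
class DraftAnswer:
    question: str
    topic: str
    confidence: float   # assumed 0.0-1.0 score attached to the draft answer
    sources: list[str]  # approved knowledge-base documents the draft cites


@dataclass
class Handoff:
    queue: str     # e.g. "tier-1" or "tier-2" helpdesk queue
    reason: str    # stored so escalations can be tracked and reviewed
    question: str


def route(draft: DraftAnswer, min_confidence: float = 0.7) -> Optional[Handoff]:
    """Return a Handoff if a human should take over, or None if the AI may answer."""
    if draft.topic in ALWAYS_ESCALATE:
        return Handoff("tier-2", f"sensitive topic: {draft.topic}", draft.question)
    if not draft.sources:
        return Handoff("tier-1", "no approved source found", draft.question)
    if draft.confidence < min_confidence:
        return Handoff("tier-1", f"low confidence ({draft.confidence:.2f})", draft.question)
    return None
```

Checking sensitive topics first means a confident answer on a grade appeal still reaches a person. Recording the `reason` answers the "how it’s tracked" question when support leads review escalations.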

Platform

Once people and process are ready, your platform setup ensures everything functions securely, contextually, and with measurable outcomes. Technical readiness includes integrations, configuration, and analytics that allow AI tools to function inside the learning ecosystem, not adjacent to it.

- LMS connector activated (Canvas, Brightspace, Moodle): The system must be fully embedded, with no extra logins and no tab-hopping.

- SSO enabled and scoped by role: Students, faculty, and staff should each access the platform via institutional credentials, with role-specific permissions.

- RBAC configured to separate student vs. instructor use cases: AI should behave differently depending on who’s interacting with it.

- Knowledge ingestion feeds mapped and prioritized: Connect SharePoint, LMS, and other knowledge sources based on support demand (start with FAQs, policy pages, course docs).

- Analytics hooks activated and baseline metrics collected: Track usage, resolution time, deflection rate, and student/faculty engagement by role and course.
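In an LMS launch, the student vs. instructor distinction typically arrives in the LTI 1.3 roles claim. The following Python sketch maps that claim to a platform role. The claim name and the two role URIs come from the IMS LTI 1.3 specification. The sketch assumes the launch `id_token` has already been signature- and nonce-validated, which is out of scope here. The output labels (`"instructor"`, `"student"`, `"restricted"`) are hypothetical.

```python
# Claim name and role URIs defined by the IMS LTI 1.3 specification.
ROLES_CLAIM = "https://purl.imsglobal.org/spec/lti/claim/roles"
LTI_INSTRUCTOR = "http://purl.imsglobal.org/vocab/lis/v2/membership#Instructor"
LTI_LEARNER = "http://purl.imsglobal.org/vocab/lis/v2/membership#Learner"


def platform_role(claims: dict) -> str:
    """Map an already-verified LTI 1.3 launch to a hypothetical platform role.

    A user holding both roles in a course is treated as an instructor there.
    Unknown or missing roles fall back to the most restrictive option.
    """
    roles = set(claims.get(ROLES_CLAIM, []))
    if LTI_INSTRUCTOR in roles:
        return "instructor"
    if LTI_LEARNER in roles:
        return "student"
    return "restricted"
```

Falling back to `"restricted"` rather than `"student"` keeps misconfigured launches from silently gaining access. This is the same deny-by-default principle behind RBAC.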

Governance-in-the-Loop: Guardrails in Action

What to Expect in the First Term

The most successful LearnWise partners report:

- 30–50% Tier‑1 support deflection

- ~62% faster mean time-to-resolution for student queries

- 3–5 hours/week saved per instructor on feedback

- 10x faster time to launch compared to chatbot vendors or generic copilots

Institutions that measure KPIs weekly and iterate based on analytics see compound ROI, especially when using AI to triage early interventions for at-risk students.

Chapter 5: The Competitive AI for Education Landscape (Buyer’s Guide)

As AI adoption accelerates in higher education, institutions face a growing menu of solutions, from generic tutoring tools to enterprise case management systems. Choosing the right platform is no longer just a matter of functionality; it’s about fit, focus, and future-proofing.

To help institutions make informed decisions, we outline the four major categories of AI support solutions in 2025 and analyze how they differ across key dimensions.

The Four Categories of AI Support Platforms

Comprehensive AI Education Support Platforms: LearnWise AI

These platforms are designed specifically for academic and administrative AI support inside the institution's digital ecosystem. LearnWise is the leader in this category due to its:

- Deep LMS integration (and 40+ tool integrations across institutional tech stacks)

- Ingestion of course content, policy docs, portals, and knowledge bases, reducing AI hallucination

- Role-aware AI that behaves differently for students, instructors, staff

- Rubric-aligned grading assistance native to LMS workflows

- Institution-wide analytics for knowledge gap detection, and ongoing management & improvement

- Fixed-fee licensing and week-scale implementation

Why LearnWise Wins for Responsible AI Adoption

Chapter 6: Common Objections to AI Implementation in Education

Even when AI adoption aligns with institutional goals, pushback is inevitable. IT leaders worry about data exposure. Faculty raise concerns about control and integrity. Finance teams ask about sustainability and return on investment. And governance bodies want assurance that ethical use isn’t an afterthought.

This chapter equips you to answer those questions clearly, credibly, and with evidence.

Objection 1: How does the platform handle data privacy, role-based access, compliance, and third-party risk?

IT leaders get a full technical documentation package, including security architecture diagrams, compliance attestations, and incident response plans. Here are a few ways LearnWise meets high security standards:

- ISO 27001 certified with comprehensive security frameworks

- GDPR and FERPA compliant with strict boundaries around student data

- SSO (SAML, OAuth2) and RBAC to scope access and behavior by role

- Sub-processor transparency via a published trust portal

- Audit trails for every AI interaction and user action

- No data used to train LLMs; institutional data stays within your boundary

Objection 2: What’s the ROI? What improvements can we expect, and when?

- 30–50% reduction in Tier-1 support tickets, as seen at multiple institutions in production

- 62% improvement in Mean Time To Resolution (MTTR) for service interactions

- 3–5 hours per instructor saved weekly on grading and feedback

- 9–12 month payback period for institution-wide deployments

- Analytics dashboards to track engagement, self-service rates, deflection, and content gaps

LearnWise licenses are fixed-fee, avoiding the volatility of consumption-based AI pricing.
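To pressure-test figures like these against your own data, the underlying arithmetic (ticket deflection savings, relative MTTR improvement, and a simple payback period) can be sketched as below. This is a minimal illustration under stated assumptions, not LearnWise's ROI model: the class and function names, ticket volume, per-ticket cost, MTTR values, and license fee are all hypothetical placeholders, and the payback formula deliberately ignores one-time implementation costs and faculty time savings.

```python
from dataclasses import dataclass


@dataclass
class SupportBaseline:
    """Hypothetical inputs an institution would pull from its own service desk data."""
    monthly_tier1_tickets: int   # Tier-1 tickets per month before AI support
    cost_per_ticket: float       # assumed fully loaded staff cost per ticket
    mttr_hours_before: float     # mean time to resolution before AI support
    mttr_hours_after: float      # mean time to resolution after AI support


def deflection_savings_per_month(b: SupportBaseline, deflection_rate: float) -> float:
    """Monthly staff cost avoided when a share of Tier-1 tickets is self-served."""
    if not 0.0 <= deflection_rate <= 1.0:
        raise ValueError("deflection_rate must be between 0 and 1")
    return b.monthly_tier1_tickets * deflection_rate * b.cost_per_ticket


def mttr_improvement(b: SupportBaseline) -> float:
    """Relative reduction in MTTR, e.g. 0.62 means a 62% improvement."""
    return (b.mttr_hours_before - b.mttr_hours_after) / b.mttr_hours_before


def payback_months(annual_license_fee: float, monthly_savings: float) -> float:
    """Months until cumulative savings cover one year of a flat license fee.

    Simplifying assumption: no one-time implementation or training costs.
    """
    if monthly_savings <= 0:
        return float("inf")  # savings never cover the fee
    return annual_license_fee / monthly_savings


if __name__ == "__main__":
    # Illustrative numbers only; replace with your institution's own data.
    baseline = SupportBaseline(
        monthly_tier1_tickets=2000,
        cost_per_ticket=12.0,
        mttr_hours_before=10.0,
        mttr_hours_after=3.8,
    )
    savings = deflection_savings_per_month(baseline, deflection_rate=0.40)
    print(f"Monthly savings from deflection: ${savings:,.0f}")
    print(f"MTTR improvement: {mttr_improvement(baseline):.0%}")
    print(f"Payback period: {payback_months(90_000.0, savings):.1f} months")
```

Because the license is a flat annual fee, the denominator of the payback calculation is the only variable; under consumption-based pricing, the fee itself would rise with usage and the calculation would need a cost-per-interaction term.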

Objection 3: “Is it affordable and predictable?”

Will this create budget creep? How does it fit into our procurement framework?

- Annual flat-fee licensing: no usage-based billing, token charges, or overages

- Multi-year agreements align with budget cycles and offer discounted renewal

- Compatible with procurement and funding frameworks such as Chest (UK), HEERF (US), and state edtech contracts

- SaaS model includes all features, updates, and maintenance, with no upcharges

LearnWise’s TCO is lower than enterprise CRM chat tools or standalone AI copilot add-ons when you account for integrations, training, and license sprawl.

Objection 4: “Will this disrupt faculty workflows?”

Will AI override instructor judgment or add new tech burden?

- LMS-native feedback assistant integrates into Canvas SpeedGrader, Brightspace Grader, and standard LMS views

- Instructors retain full control: accept, edit, or reject AI suggestions

- No external logins or file uploads; everything works in the existing grading flow

- Faculty guide AI tone, level, and rubric alignment with editable settings

- AI can be disabled per assignment, course, or instructor

Objection 5: “But our data isn’t ready...”

We don’t have centralized knowledge. Won’t AI just spread confusion?

- LearnWise is designed to work with incomplete data: You can launch with 10–15 high-value sources (e.g., SharePoint pages, LMS help docs, syllabi, policy PDFs) and grow your knowledge base as you go.

- Built-in knowledge gap detection flags missing or conflicting info

- Content governance tools let you approve, restrict, or auto-update sources

- Feedback analytics highlight where students are asking about unclear policies

- Progressive enhancement model: launch with what you have, improve weekly

Institutions often launch in phases, e.g., starting with only academic support or enrollment FAQs, before expanding to broader content.

Objection 6: “How do we know what the AI is doing?”

Can we see how it works? Can we change how it behaves? The short answer: Yes.

- System prompts are editable: You control tone, instructions, and disclaimer language

- Behavior audit logs track responses, usage volume, user flags, and feedback over time

- Faculty can preview and test AI before rolling it out in live classes

- Version control allows administrators to review changes to AI behavior prompts and ingestion sources

- No training on institutional data; all AI responses are generated from retrieved context only

LearnWise supports red-teaming, sandbox testing, and prompt reviews during procurement and implementation phases.

Objections Aren’t Roadblocks: They’re Readiness Signals

In every successful LearnWise deployment, questions like these were raised. They were signals that leadership teams were taking AI adoption seriously.

What matters is having clear, evidence-based answers. With documentation, references, sandbox access, and proof of value from peer institutions, LearnWise helps institutions turn objections into commitments.

Chapter 7: AI Adoption in Education Toolkits & Templates

AI readiness isn't just about strategy; it's about execution. Even with buy-in, budget, and the right platform, institutions often stumble in the “last mile” of launch because they lack practical tools: RFP templates, policy language, training plans, or KPIs.

This chapter gives you plug-and-play assets to help accelerate institutional adoption with clarity, control, and credibility.

RFP/RFI Vendor Checklist

When evaluating AI platforms, these must-have capabilities ensure ethical, scalable, and sustainable deployment:

Core Capabilities

- LMS-native integration (Canvas, Brightspace, Moodle, Blackboard)

- LMS data ingestion (not just API pings, but real content usage)

- Role-awareness (differentiates student, instructor, staff)

- Rubric-aligned feedback tools

- Ticketing system escalation (e.g., Zendesk, Salesforce)

Knowledge Management

- Central knowledge ingestion from SharePoint, LMS, web, PDFs

- Knowledge gap detection and improvement suggestions

- Admin control over what content is used and how

- Feedback flagging and editing

Security & Compliance

- ISO 27001 / SOC 2 / GDPR compliant

- Sub-processor list and data residency transparency

- SSO (SAML or OAuth2) and RBAC

- No training of LLMs on institutional data

Governance & Control

- System prompt control (editable behavior)

- Version control of prompts and ingestion logs

- Interaction audit logs for student and faculty prompts

- Institution-wide analytics dashboard

Support Model & Deployment

- Deployment within 3–6 weeks

- Sandbox access before signing

- Dedicated implementation and success teams

- Faculty development & training resources provided

Policy & Syllabus Language Templates

Clear, consistent language builds trust and protects academic integrity. Below is an example of a ready-to-use syllabus clause and institutional AI policy snippet.

Syllabus Statement for AI Use Example:

AI Use in This Course:

In this course, students may use institution-approved AI tools (e.g., LearnWise Feedback or Tutor) to supplement their understanding of course content. These tools provide explanations, resources, and suggestions but do not replace students' own original thinking or work. Misuse, such as using AI to submit answers or generate essays without attribution, may constitute academic misconduct.

Institutional Policy Snippet Example:

AI Governance Policy (Excerpt):

Approved AI tools must comply with institutional standards of privacy, academic integrity, and accessibility. Only tools listed on the [Institution AI Resource Hub] are authorized for academic use. All AI-generated responses should be reviewed by humans prior to use in official communications or assessments.

Students and staff should report any AI behavior that appears biased, inaccurate, or inappropriate via [reporting mechanism].

Institutions should offer faculty editable templates that can be added to LMS course shells, syllabi, and student orientations.

Human-Centered AI in Education Starts This Fall

AI in higher education isn’t coming; it’s already here. The difference between those who succeed and those who struggle in 2025 will come down to readiness.

This guide has shown that institutional AI success depends on three core principles:

- Make governance visible. Create cross-functional teams. Publish policies. Educate everyone. Don’t hide your AI stance; lead with it.

- Meet students and faculty where they are. Put AI tools in the LMS. Make them accessible, contextual, and ethical. Respect roles. Preserve agency.

- Use analytics to improve, not just operate. Track deflection, resolution time, usage patterns, and knowledge gaps. Let data inform your support playbooks and service design.

The LearnWise Advantage

LearnWise exists to help institutions move fast, build trust, and sustain impact. With native integrations across your campus systems, including LMSs such as Brightspace, Canvas, Moodle, and Blackboard, and use cases spanning academic feedback, tutoring, and support, LearnWise is uniquely positioned to help institutions:

- Deploy in weeks, not months

- Launch with limited staff time

- See measurable outcomes in the first term

Ready to Act?

Whether you're exploring RFPs, planning your AI policy rollout, or seeking guidance on ethical grading tools, this whitepaper gives you the frameworks, playbooks, and templates to move forward today, not next school year.

Start small or start wide, but start now.
