Key Takeaways from the AI Readiness Community of Practice – UK & Ireland Education Leaders

Last week, education leaders from across the UK and Ireland came together for our AI Readiness Community of Practice, a community session focused on helping HE and FE institutions align AI integration with their strategic goals. From frameworks to vendor vetting and culture change, we spotlighted real examples, tools, and blueprints to guide institutions forward. Below are our key takeaways and resources. Want to join our next session? Get in touch.

How to Align AI with Strategic Goals in Higher Education

The session opened by framing AI readiness around concrete institutional priorities: TEF outcomes, NSS scores, and digital transformation strategies.

AI is already reshaping core student experience areas:

- Personalized learning paths through adaptive platforms

- Accelerated feedback cycles with automated assessment

- Accessible learning resources through multilingual, assistive tools

Institutions reported a 15–25% improvement in satisfaction with learning opportunities, a 20–30% boost in feedback quality, and up to a 28% gain in perceived resource relevance.

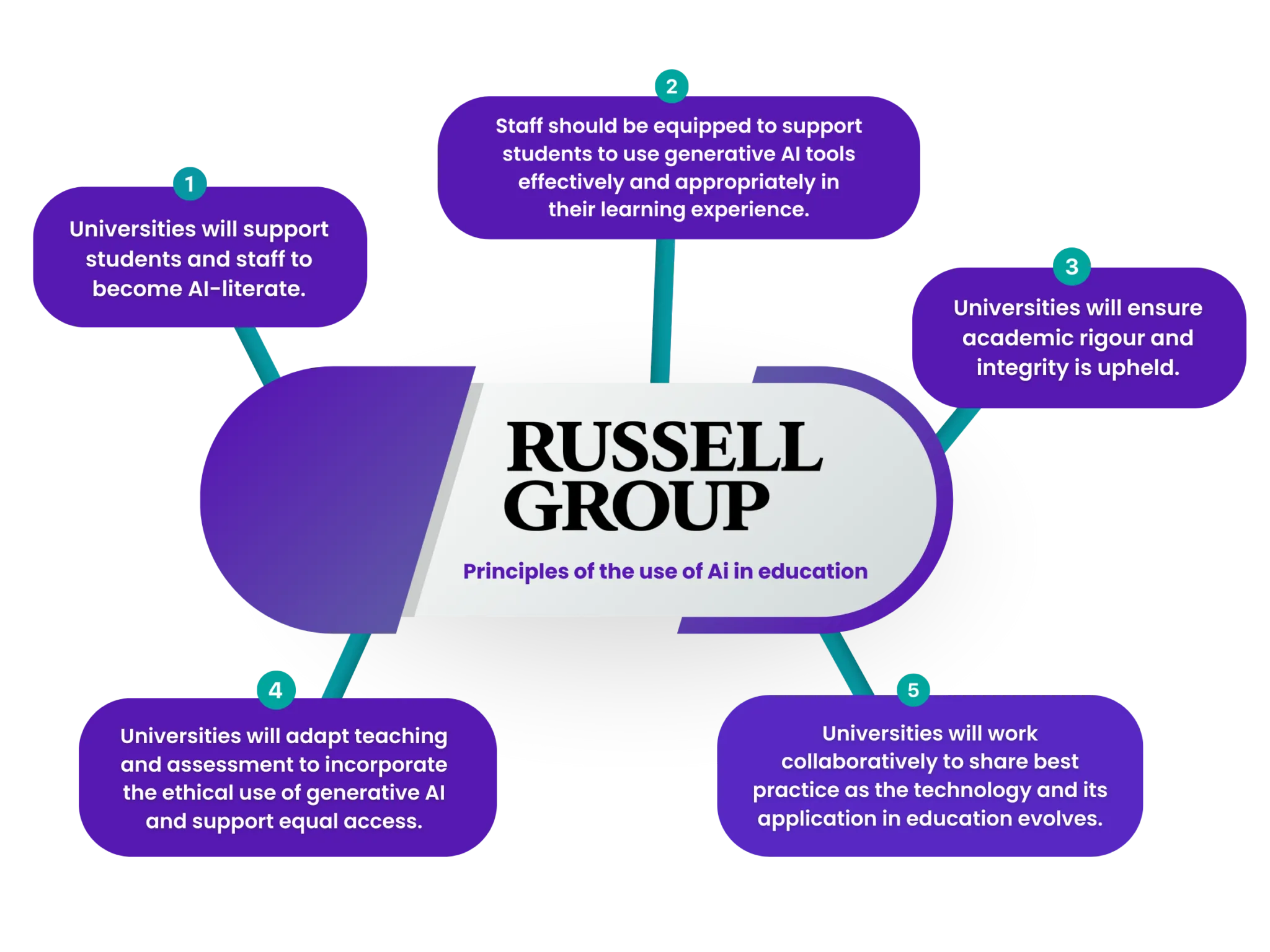

AI adoption cannot happen in isolation from institutional strategy. Alignment with metrics like the NSS (National Student Survey) and TEF (Teaching Excellence Framework), and with digital transformation plans, is essential. Rather than viewing AI as a bolt-on tool, institutions should connect it directly to outcomes like student satisfaction, operational efficiency, and teaching innovation. AI’s potential to improve retention, automate routine processes, and boost satisfaction scores can be a catalyst for unlocking budget and internal buy-in.

The reality is that most institutions already have AI-adjacent initiatives underway, whether in assessment automation or course personalization. What’s often missing is a governance wrapper and strategic framing. Education leaders should audit their existing practices and map them to student outcomes, using a simple Venn diagram of where cost efficiency meets student experience as a planning tool.


Key Recommendations:

- Map AI pilots directly to NSS domains and TEF criteria

- Pair student-focused innovations with institutional automation gains

- Use AI maturity models (like Jisc’s) to benchmark and track progress

How to Vet AI Solutions: Going Beyond GPT

The second challenge discussed was how to evaluate AI tools—particularly as vendor offerings flood the market. Panelists emphasized selecting solutions that go beyond vanilla GPT to deliver institutional ROI.

How can institutions distinguish between marketing hype and practical AI value? We introduced the “Value-over-GPT” checklist: institutions should ask whether AI platforms integrate with student information systems (SIS), learning management systems (LMS), or CRM systems; provide guardrails like system prompt control and review workflows; and respect data sovereignty (e.g., the ability to export and analyze chatbot interactions). Without these foundations, institutions risk locking themselves into “black box” vendors or unscalable pilots.

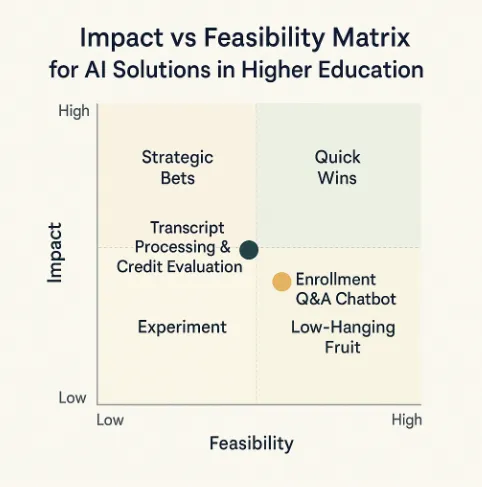

The conversation moved into practical deployment planning. We explained how AI adoption should follow an impact vs. feasibility matrix—encouraging institutions to begin with high-volume, low-complexity processes like admissions queries or transcript automation. Institutions should ask for live references and evaluate vendors on whether they enable real-time iteration. The goal is not just adoption—it’s building an adaptive, measurable AI ecosystem on campus.
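To make the impact vs. feasibility idea concrete, here is a minimal Python sketch, assuming each candidate process is scored from 1 to 5 on impact and on feasibility. The `Candidate` class, the threshold of 3, the quadrant labels, and the example scores are all illustrative assumptions, not figures or a method presented in the session.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A process being considered for AI support (hypothetical example)."""
    name: str
    impact: int       # 1 (low) to 5 (high), e.g. query volume or student-facing value
    feasibility: int  # 1 (hard) to 5 (easy), e.g. low complexity, data readily available


def quadrant(c: Candidate, threshold: int = 3) -> str:
    """Place a candidate in one cell of a 2x2 impact vs. feasibility matrix."""
    high_impact = c.impact >= threshold
    high_feasibility = c.feasibility >= threshold
    if high_impact and high_feasibility:
        return "quick win"
    if high_impact:
        return "strategic bet"
    if high_feasibility:
        return "fill-in"
    return "deprioritize"


def prioritize(candidates: list[Candidate]) -> list[tuple[str, str]]:
    """Order candidates: quick wins first, then by combined score (highest first)."""
    cell_order = {"quick win": 0, "strategic bet": 1, "fill-in": 2, "deprioritize": 3}
    ranked = sorted(
        candidates,
        key=lambda c: (cell_order[quadrant(c)], -(c.impact + c.feasibility)),
    )
    return [(c.name, quadrant(c)) for c in ranked]


if __name__ == "__main__":
    # Illustrative scores only; not data from the session.
    pipeline = [
        Candidate("Research supervision matching", impact=4, feasibility=2),
        Candidate("Admissions FAQ queries", impact=5, feasibility=5),
        Candidate("Transcript request automation", impact=4, feasibility=4),
        Candidate("Timetable email digests", impact=2, feasibility=4),
    ]
    for name, cell in prioritize(pipeline):
        print(f"{cell:>13}: {name}")
```

Sorting quick wins first mirrors the advice to start with high-volume, low-complexity processes; an institution would substitute its own scoring criteria and threshold.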

What to look for:

- Deep integrations with SIS, LMS, CRM

- Guardrails like editable system prompts, approval workflows, and human-in-the-loop checkpoints

- Data control: sovereignty, exportability, and analytics

- Flexible cost models: consider total cost of ownership (TCO), not just usage pricing

- Proven ROI: has the vendor delivered results on a real campus?
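One way to apply this checklist consistently across vendors is to record each answer and flag the gaps. The minimal Python sketch below assumes a yes/no answer per criterion. The criterion keys, the split between foundations and additional checks (the foundations follow the three named in the Value-over-GPT checklist), and the verdict labels are hypothetical choices for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical criterion keys paraphrasing the "What to look for" list.
# Foundations: the three areas the Value-over-GPT checklist calls foundational.
FOUNDATIONS = {
    "integrations": "Deep integration with SIS, LMS, and CRM",
    "guardrails": "Editable system prompts, approval workflows, human-in-the-loop checkpoints",
    "data_control": "Data sovereignty, exportability, and analytics",
}
ADDITIONAL = {
    "cost_model": "Flexible cost model assessed on TCO, not just usage pricing",
    "proven_roi": "Results delivered on a real campus",
}


@dataclass
class VendorReview:
    vendor: str
    answers: dict[str, bool] = field(default_factory=dict)

    def missing(self, criteria: dict[str, str]) -> list[str]:
        """Return criteria not confirmed; an unanswered criterion counts as not met."""
        return [key for key in criteria if not self.answers.get(key, False)]

    def verdict(self) -> str:
        """Illustrative verdict labels: foundations gate everything else."""
        if self.missing(FOUNDATIONS):
            return "high risk: missing foundations"
        if self.missing(ADDITIONAL):
            return "proceed with caution"
        return "shortlist"


if __name__ == "__main__":
    review = VendorReview("Vendor A", {"integrations": True, "guardrails": True})
    print(review.verdict(), review.missing(FOUNDATIONS))
```

Treating an unanswered question as not met is a deliberately conservative choice: a vendor that cannot confirm data exportability does not pass by default.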


Change Management & Institutional Culture

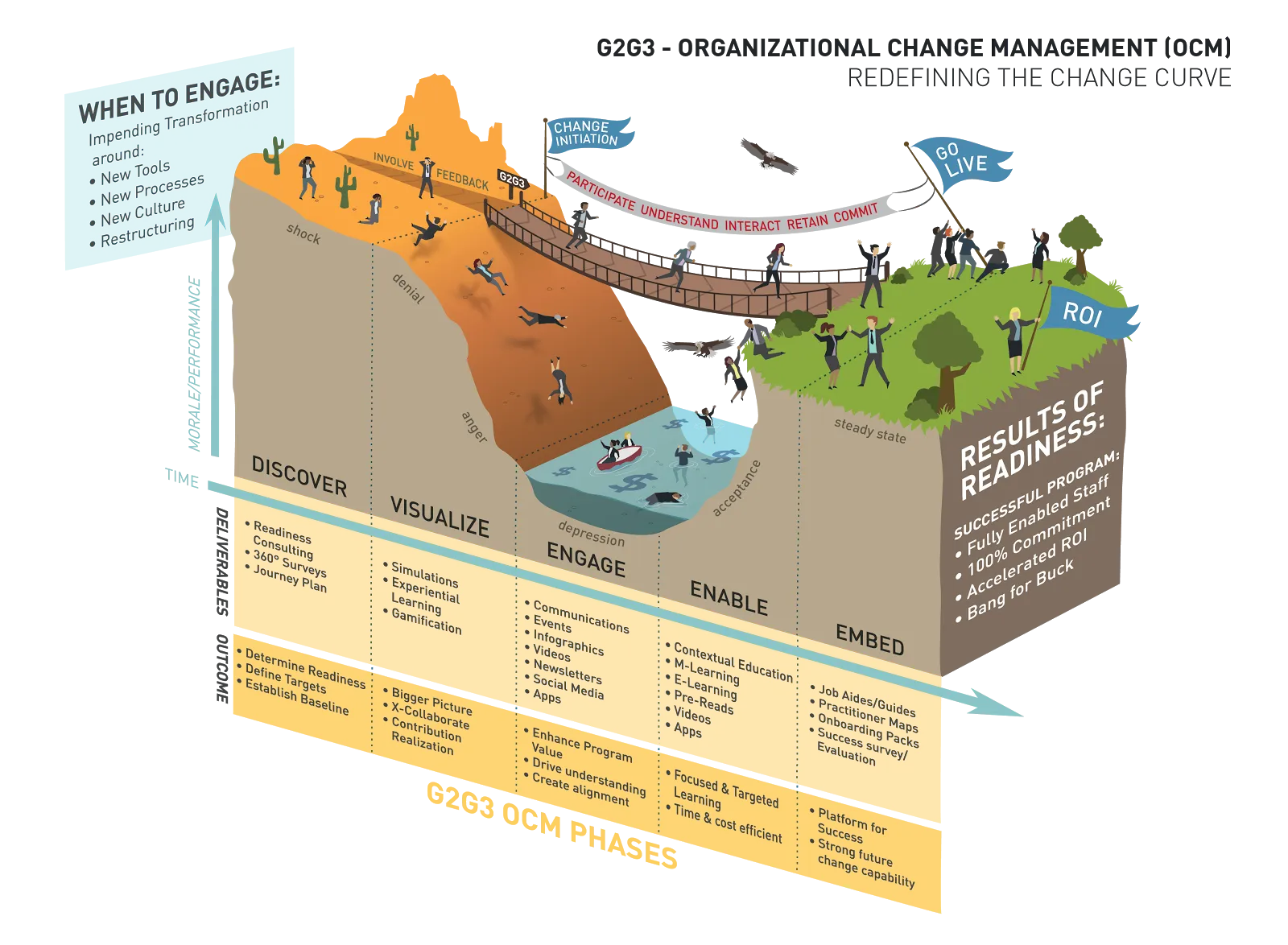

AI integration isn’t just technical; it’s deeply cultural. The third part of the session tackled change management and AI literacy.

The focus then shifted to people, not platforms. We outlined how student and staff adoption is a make-or-break factor for AI initiatives. With 92% of UK students using Gen-AI tools and 61% of faculty having tried them, often informally, the conversation centered on moving from passive to informed use. Cultural blockers identified included “AI guilt” among students, ethical uncertainty, and staff worries around fairness, bias, and privacy. But as we emphasized, these are not roadblocks; they’re starting points for engagement.

We encouraged institutions to involve students and educators early and stressed the value of transparency: explain why and how AI is being used, not just which tool is being introduced. The University of Gloucestershire’s “Nova” assistant was cited as an example of student-facing AI framed around academic advising, accessible 24/7, and introduced with clear communication about its limitations and support structure.

- Direct student and faculty involvement: involving learners and educators early

- Intensive approaches: needs assessments, focus groups, hackathons, test labs

- Non-intensive approaches: naming competitions and polls, which make for a soft landing when AI technology is implemented

AI in Higher Education Adoption Trends:

- 92% of UK university students now use Gen-AI tools

- 61% of faculty have tried Gen-AI

- 93% of staff expect to scale usage within 2 years

Sources: Ellucian AI in Higher Education Professionals Survey (2024), Digital Education Council’s Global AI Faculty Survey (2025), HEPI Student Generative AI Survey 2025

Key Cultural Barriers to AI Adoption in Higher Education:

- Student concerns: academic integrity, "AI guilt"

- Staff concerns: bias in AI systems, data privacy

Actionable Practices:

- Co-design AI initiatives with students and faculty

- Run focus groups, test labs, and soft activities (like naming competitions or polls)

- Foster trust with transparent policies, inclusive access, and frequent communication

Resources to Support Institutional AI Governance

We shared a curated set of blueprints and policy frameworks to help institutions build their own AI governance strategies:

- Jisc AI Maturity Toolkit for Tertiary Education

- Generative AI Student Guidance for FE (National Centre for AI)

- University of Edinburgh Gen-AI Guidance

- DCU AI Position Statement

- University of Birmingham Education Excellence Hub

Access all of these in one place via our resource link: Download the Blueprint PDF

An Invitation

Want to join our next community session? Get in touch.

About LearnWise

LearnWise AI empowers universities to enhance student support with an always-available, AI-powered assistant that integrates seamlessly across 400+ EdTech tools. Designed to meet institutions at any stage of their AI adoption journey, LearnWise optimizes student engagement, reduces operational costs, and provides instant, context-aware assistance across multiple platforms. Trusted by leading institutions worldwide, LearnWise has streamlined services for over 1M students, cutting support queries by half and transforming the learning experience.
